Google On Fixing Discovered Currently Not Indexed

Google's John Mueller weighs in on whether removing pages helps fix the Discovered - Currently Not Indexed problem.

Google's John Mueller answered a question about whether removing pages from a large site helps solve the problem of pages that Google has discovered but not indexed. John offered general advice on how to fix this problem.

Discovered - Currently Not Indexed

Google Search Console is a service that reports search-related issues and feedback to publishers.

A site's indexing status is a key part of Search Console because it tells a publisher how much of the site is indexed and eligible to rank.

The Page Indexing report in Search Console shows the indexing status of a site's web pages.

A notice that Google discovered a page but did not index it is often a sign that there is a problem to fix.

There are several reasons why Google may discover a page but not index it; however, Google's official documentation lists only one:

"Discovered - currently not indexed

The page was found by Google, but not crawled yet.

Typically, Google wanted to crawl the URL, but this was expected to overload the site, so Google rescheduled the crawl.

This is why the last crawl date is empty on the report."

Google's John Mueller elaborates on why a page could be discovered but not indexed.

De-indexing Non-indexed Pages to Enhance Overall Indexing?

There is a theory that removing some pages helps Google crawl the rest of the site by giving it fewer pages to crawl.

There is a widespread misconception that Google has a finite crawl capacity (crawl budget) for each site.

Googlers have said on several occasions that there is no such thing as a crawl budget in the sense that SEOs understand it.

Google considers a lot of factors when determining how many pages to crawl, including the website server's capacity to handle intensive crawling.

One of the reasons Google is picky about how much it crawls is that Google does not have the storage capacity to save every single webpage on the Internet.

As a result, Google tends to index pages that have some value (if the server can handle crawling them) and leaves other pages unindexed.

For additional information on Crawl Budget, see Google Provides Crawl Budget Insights.

The question was: "Will deindexing and consolidating 8M used products into 2M unique indexable product pages help improve crawlability and indexability (Discovered - currently not indexed problem)?"

Google's John Mueller first acknowledged that it was not possible to address the person's specific situation before offering general advice.

He responded:

"It's hard to say.

I'd recommend reviewing the large site's guide to crawl budget in our documentation.

For large sites, sometimes crawling more is limited by how your website can handle extra crawling.

In most cases though, it's more about overall website quality.

Are you significantly improving the overall quality of your website by going from 8 million pages to 2 million pages?

Unless you focus on improving the actual quality, it's easy to spend a lot of time reducing the number of indexable pages without actually making the website better, and that wouldn't improve things for search."

Mueller Gives Two Reasons for the Discovered Not Indexed Issue

Google's John Mueller provided two reasons why a page can be discovered but not indexed.

- Server Capacity

- Overall Website Quality

1. Server Capacity

According to Mueller, Google's capacity to crawl and index webpages is "limited by how your website can handle extra crawling."

The larger a site is, the more bot activity it takes to crawl it. To make matters worse, Google isn't the only bot crawling a large website.

Other legitimate bots, such as those from Microsoft and Apple, also try to crawl the site. On top of those, there are many other bots, some legitimate and others associated with hacking and data scraping.

This means that for a large site, especially in the evening hours, hundreds of bots may be consuming server resources to crawl it.

That is why one of the first questions I ask a publisher with indexing problems is about the state of their server.

In general, a website with millions, or even hundreds of thousands, of pages needs a dedicated server or a cloud host, because cloud servers offer scalable resources such as bandwidth, CPU, and RAM.

Sometimes a hosting environment needs more memory allocated to a process, for example a higher PHP memory limit, to help the server cope with heavy traffic and avoid 500 error responses.

Analyzing a server error log is part of troubleshooting.
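As a starting point for that analysis, here is a minimal Python sketch that reads an access log and tallies requests per claimed crawler alongside 5xx responses per hour, which can show whether heavy crawling and server errors coincide. It reads the access log rather than the error log because that is where HTTP status codes are recorded, and it assumes the Apache/Nginx "combined" log format. The crawler tokens, the hourly bucketing, and the sample lines are illustrative assumptions. User agents can be spoofed, so it counts claimed identities only; Google documents a reverse DNS lookup for confirming genuine Googlebot, which this sketch does not perform.

```python
import re
from collections import Counter

# One line of the Apache/Nginx "combined" access log format (assumed):
# host ident user [time] "request" status bytes "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+ '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

# User-agent tokens for a few well-known crawlers. User agents can be
# spoofed, so these are *claimed* identities, not verified ones.
KNOWN_CRAWLERS = {"Googlebot": "Google", "bingbot": "Microsoft", "Applebot": "Apple"}


def classify_agent(agent):
    """Map a user-agent string to a crawler owner, 'other bot', or 'non-bot'."""
    for token, owner in KNOWN_CRAWLERS.items():
        if token in agent:
            return owner
    return "other bot" if "bot" in agent.lower() else "non-bot"


def summarize(lines):
    """Return (requests per claimed crawler, 5xx responses per hour) for log lines."""
    requests_by_agent = Counter()
    errors_by_hour = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # skip lines that are not in combined format
        requests_by_agent[classify_agent(match.group("agent"))] += 1
        if match.group("status").startswith("5"):
            # "10/Oct/2023:22:14:03 +0000"[:14] -> "10/Oct/2023:22" (hour bucket)
            errors_by_hour[match.group("time")[:14]] += 1
    return requests_by_agent, errors_by_hour


# Made-up sample lines (documentation IP ranges), for illustration only.
SAMPLE_LOG = [
    '192.0.2.10 - - [10/Oct/2023:22:14:03 +0000] "GET /product/123 HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.7 - - [10/Oct/2023:22:14:05 +0000] "GET /product/456 HTTP/1.1" 200 4096 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '203.0.113.9 - - [10/Oct/2023:22:14:06 +0000] "GET /product/789 HTTP/1.1" 503 0 "-" '
    '"ScraperBot/1.0"',
]

if __name__ == "__main__":
    by_agent, errors = summarize(SAMPLE_LOG)
    print("Requests per claimed crawler:", dict(by_agent))
    print("5xx responses per hour:", dict(errors))
```

On a real server you would pass an open file instead of the sample list, for example `with open("access.log") as f: summarize(f)` (the path is hypothetical). Hours where crawler requests spike in the first counter and 5xx responses climb in the second are a hint that server capacity, rather than content, is limiting crawling.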

2. Overall Website Quality

This is an interesting reason why not enough pages get indexed. Overall site quality is something like a score or assessment that Google assigns to a website.

Website Components Can Influence Overall Site Quality

According to John Mueller, a section of a website can influence the overall site quality determination.

Mueller stated:

"...for some things, we consider the overall quality of the site.

And when we look at the overall quality of the site, it makes no difference to us why you have significant portions that are lower quality.

...if we notice that there are significant parts that are of lower quality, we may conclude that this website is not as fantastic as we thought."

Site Quality Definition

In another Office Hours video, Google's John Mueller defined site quality as follows:

"When we talk about content quality, we don't just mean the text of your articles.

It's all about the overall quality of your website.

Everything from the layout to the design is included.

Like, how you present things on your pages, how you integrate images, how you work with speed, all of those things come into play."

How Long Does It Take to Determine Site Quality Overall?

Another thing to know about how Google determines overall site quality is how long it can take: it can take months.

Mueller stated:

"It takes us a long time to understand how a website fits in with the rest of the Internet.

...And that can easily take, I don't know, a couple of months, a half-year, or even more than a half-year..."

Optimizing a Website for Crawling and Indexing

Optimizing an entire site or a section of a site is a high-level approach to the problem. Often, individual pages also need to be optimized at scale.

Optimization can take several forms, especially for e-commerce sites with thousands to millions of products.

Things to keep an eye out for:

The Main Menu

Make sure the main menu is optimized to direct users to the most important sections of the site. The main menu can also provide access to the most popular pages.

Link to Popular Sections and Pages

A prominent section of the homepage can also link to the most popular pages and sections.

This not only helps users find the pages and sections that are most important to them, but it also tells Google that these are important pages that should be indexed.

Enhance Thin Content Pages

Thin content is defined as pages that have little useful content or are mostly duplicates of other pages (templated content).

It is not sufficient to simply fill the pages with words. Words and sentences must be meaningful and relevant to site visitors.
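One way to find templated, mostly duplicate pages at scale is to compare their text using word shingles (overlapping runs of words) and Jaccard similarity. The Python sketch below shows that generic technique; it is not how Google measures duplication. The three-word shingle size, the 0.8 threshold, and the sample pages are assumptions, and it expects page text that has already been extracted from the HTML.

```python
import re
from itertools import combinations


def shingles(text, k=3):
    """Return the set of k-word shingles (overlapping word sequences) in text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity |A & B| / |A | B|; defined as 0.0 for two empty sets."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicates(pages, threshold=0.8, k=3):
    """Yield (url_a, url_b, similarity) for page pairs at or above threshold.

    pages maps a URL to its extracted text. The threshold is an assumed
    cut-off, not a value taken from Google.
    """
    signatures = {url: shingles(text, k) for url, text in pages.items()}
    for (url_a, sig_a), (url_b, sig_b) in combinations(signatures.items(), 2):
        similarity = jaccard(sig_a, sig_b)
        if similarity >= threshold:
            yield url_a, url_b, similarity


# Hypothetical product pages: /p/1 and /p/2 differ only in one word.
SAMPLE_PAGES = {
    "/p/1": "Blue widget. Free shipping on all orders. Buy now and save on widgets today.",
    "/p/2": "Red widget. Free shipping on all orders. Buy now and save on widgets today.",
    "/p/3": "How to choose a widget: compare materials, sizes, and warranty terms before buying.",
}

if __name__ == "__main__":
    for url_a, url_b, similarity in near_duplicates(SAMPLE_PAGES):
        print(f"{url_a} ~ {url_b}: {similarity:.2f}")
```

Comparing every pair grows quadratically with the number of pages, so a catalog with millions of URLs would need an approximate method such as MinHash with locality-sensitive hashing. Pages flagged this way are candidates for richer, more useful content or consolidation, not automatic removal.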

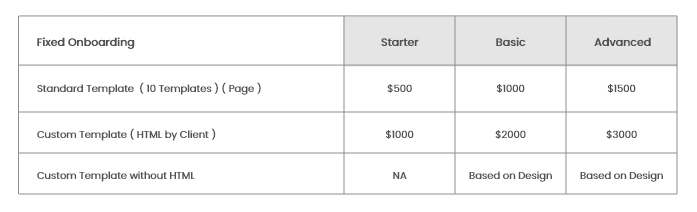

While you handle all of the non-technical aspects of SEO for enterprise sites, Hocalwire CMS handles the technical ones: maintaining a large sitemap, helping Google index pages, optimizing page load times, managing assets and file systems, and flagging broken links and pages. These are significant value additions if you're looking for an enterprise-grade content management system. Get a free demo of Hocalwire CMS to learn more.